ASP.NET Web Forms modernization series, Part 2: Business logic layer considerations

This is part 2 in a series of blog posts dedicated to ASP.NET Web Forms modernization. The series expands on the topics discussed in our Migration Guide: ASP.NET Web Forms to Modern ASP.NET whitepaper – a compilation of technical considerations for modernizing legacy .NET web applications, gathered from our experience at Resolute Software across many .NET modernization projects.

Here is a list of all articles in the ASP.NET Web Forms modernization series:

- Part 1 – Data Access Layer Considerations

- Part 2 – Business Logic Layer Considerations (you are here!)

Coming up next (stick around!):

- Part 3 – UI Layer Considerations

- Part 4 – Single Page Application Framework Considerations

- Part 5 – Server Application Considerations

- Part 6 – Testing, Deployment and Operational Considerations

Intro

Modernizing your legacy .NET application is a process with many moving parts. The business logic layer is potentially the most complex area of your system overall, containing your domain model, entities, data transfer objects (DTOs) and your business services. Combined, they constitute the sum of all useful computations done by your application, the core of its added value to the business. Modernizing all that complexity requires a careful approach, with a focus on preserving the behavior of your business logic with a minimum of breaking changes and reusing as much of the already existing code as possible.

N-tier architecture benefits

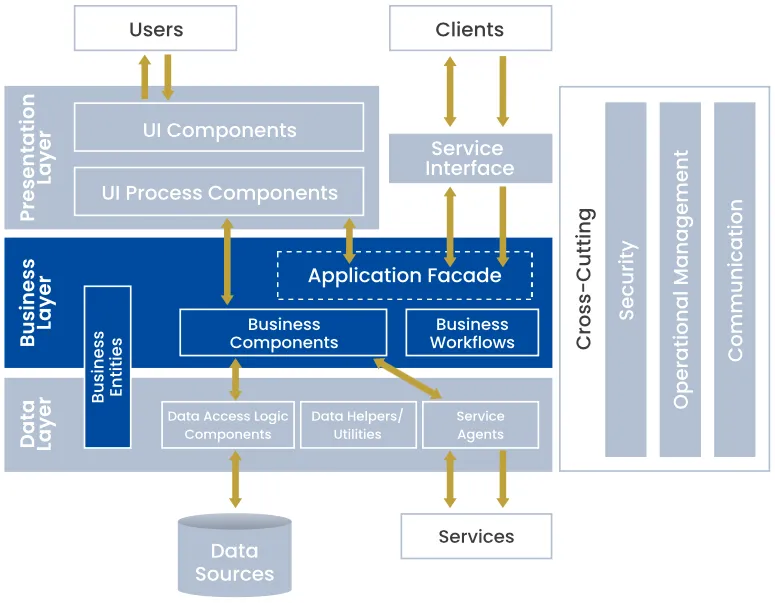

As software architects, we often talk about the benefits of an N-tier (or multitier) architecture. Layering your application is a fundamental principle in software design. An application is divided into multiple logical layers (or tiers) with separate responsibilities and a hierarchical dependency chain. Each layer above can use the layer below, but not the other way around.

A traditional three-tier application has a presentation, a business, and a database tier. More complex applications can have additional tiers, e.g., a data access tier that separates the database from the business logic tier by abstracting concepts like data entities and read and update queries. We always recommend having a separate data access layer, whose requirements we discuss in detail in part 1 of this blog post series.
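To make the dependency direction concrete, here is a minimal sketch of a three-tier call chain. It is written in TypeScript as a language-neutral stand-in for C#, and every type in it (`CustomerRepository`, `CreditService`, `OrderPage`) is hypothetical. Each tier references only the abstraction of the tier directly below it, so the presentation code never touches data access.

```typescript
// Data access tier: hides storage details behind an interface.
interface CustomerRecord {
  id: number;
  name: string;
  creditLimit: number;
}

interface CustomerRepository {
  findById(id: number): CustomerRecord | undefined;
}

// In-memory stand-in for a database-backed repository (e.g., one built on an ORM).
class InMemoryCustomerRepository implements CustomerRepository {
  constructor(private readonly rows: CustomerRecord[]) {}

  findById(id: number): CustomerRecord | undefined {
    return this.rows.find((row) => row.id === id);
  }
}

// Business tier: depends only on the data access abstraction below it.
class CreditService {
  constructor(private readonly customers: CustomerRepository) {}

  canPlaceOrder(customerId: number, amount: number): boolean {
    const customer = this.customers.findById(customerId);
    return customer !== undefined && amount <= customer.creditLimit;
  }
}

// Presentation tier: talks to the business tier only, never to the repository.
class OrderPage {
  constructor(private readonly credit: CreditService) {}

  submit(customerId: number, amount: number): string {
    return this.credit.canPlaceOrder(customerId, amount)
      ? "Order accepted"
      : "Credit limit exceeded";
  }
}

// Wiring happens in one place (the composition root), from the bottom tier up.
const page = new OrderPage(
  new CreditService(new InMemoryCustomerRepository([{ id: 1, name: "Contoso", creditLimit: 500 }])),
);
console.log(page.submit(1, 200)); // Order accepted
console.log(page.submit(1, 900)); // Credit limit exceeded
```

Because `CreditService` depends on the `CustomerRepository` interface rather than a concrete database class, the data access tier can be replaced (for example, during a migration) without touching the tiers above it.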

N-tier applications promote a clear separation of concerns and introduce source code areas (tiers) that can grow and evolve independently of each other. This concept reduces the system’s overall complexity, enhances the maintainability of the code, and allows developer specialization, thus increasing the agility of the software team.

Segregate further within the business logic layer

Looking more closely at the N-tier application architecture, we can divide the business logic layer further into smaller sub-areas of code (system components), each with a single purpose and clear dependencies on other components:

- Entities – plain model classes, data containers and DTOs, representing domain entities

- Business services – domain-specific business logic classes

- Interfaces – the public API façade of the business layer, facilitating decoupled system components through dependency injection

- Other system objects – domain events, exception classes, containers and aggregates, value objects and domain settings – additional players in your domain model and required artifacts in an enterprise service architecture
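The sketch below shows one possible shape for each of these sub-areas, in TypeScript standing in for C#. The invoicing domain and all names are hypothetical; the point is the separation into an entity (`Invoice`), a value object (`Money`), a domain exception (`DomainError`), a domain event (`InvoiceIssued`), and a service interface whose implementation receives its collaborators through constructor injection.

```typescript
// Domain exception: signals a broken business rule.
class DomainError extends Error {
  constructor(message: string) {
    super(message);
    this.name = "DomainError";
    Object.setPrototypeOf(this, DomainError.prototype); // keeps instanceof working on ES5 targets
  }
}

// Value object: immutable and compared by value, not identity.
class Money {
  constructor(readonly amount: number, readonly currency: string) {
    if (amount < 0) throw new DomainError("Amount cannot be negative");
  }
  add(other: Money): Money {
    if (other.currency !== this.currency) {
      throw new DomainError(`Cannot add ${other.currency} to ${this.currency}`);
    }
    return new Money(this.amount + other.amount, this.currency);
  }
  equals(other: Money): boolean {
    return this.amount === other.amount && this.currency === other.currency;
  }
}

// Entity: has an identity (id) and holds domain data.
class Invoice {
  readonly lines: { description: string; price: Money }[] = [];
  constructor(readonly id: number, readonly currency: string) {}
  total(): Money {
    return this.lines.reduce((sum, line) => sum.add(line.price), new Money(0, this.currency));
  }
}

// Domain event: a record of something that happened in the domain.
interface InvoiceIssued {
  kind: "InvoiceIssued";
  invoiceId: number;
  total: Money;
}

// Interface: part of the business layer's public facade.
interface InvoicingService {
  issue(invoice: Invoice): InvoiceIssued;
}

// Business service: its event publisher is injected through the constructor.
class DefaultInvoicingService implements InvoicingService {
  constructor(private readonly publish: (e: InvoiceIssued) => void) {}
  issue(invoice: Invoice): InvoiceIssued {
    if (invoice.lines.length === 0) throw new DomainError(`Invoice ${invoice.id} has no lines`);
    const issued: InvoiceIssued = { kind: "InvoiceIssued", invoiceId: invoice.id, total: invoice.total() };
    this.publish(issued);
    return issued;
  }
}
```

Consumers of the business layer depend on `InvoicingService`, not on `DefaultInvoicingService`, which is what lets a dependency injection container (or a test) supply a different implementation.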

Software projects that organize business logic code using the above or a similar segregation pattern lay the foundation of a system that is easy to maintain, easy to extend, and, when the time comes, easy to modernize into the latest framework versions and other modern technologies.

Assess the business logic modernization complexity

Legacy .NET applications with a well-maintained N-tier architecture are a best-case scenario for software modernization. The system already promotes a clean architecture with well-segregated layers that can be reused directly or refactored lightly into a modern version of .NET. Legacy N-tier applications are low-hanging fruit from a modernization standpoint. They present the best code reuse opportunity and require the least amount of refactoring, reducing the possibility of breaking changes or missing functionality in the modern version of the application.

Other legacy architecture scenarios may not be as flexible for software modernization. An extreme worst-case scenario could be, for example, an ASP.NET Web Forms application with no clear business logic segregation, where all business logic and data access code is written directly in the ASPX code-behind classes (classes in the UI layer). That architecture would require massive refactoring to segregate code into separate application tiers (data access, business logic) before the legacy Web Forms technology could be replaced with a modern equivalent like ASP.NET Core MVC or a single page application (SPA) framework. Tiering would be a prerequisite to modernization.
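As an illustration of that refactoring, here is a before/after sketch in TypeScript (used in place of C#; the page, handler, and repository names are hypothetical). A code-behind-style click handler that reads data, applies a pricing rule, and updates a label is split into a repository, a business service, and a thin page that only formats the result.

```typescript
// BEFORE: a code-behind style handler mixing data access, a business rule and UI updates.
// The Map stands in for direct SQL queries (orderId -> subtotal).
class LegacyCheckoutPage {
  totalLabel = "";
  constructor(private readonly db: Map<number, number>) {}

  btnCheckout_Click(orderId: number): void {
    const subtotal = this.db.get(orderId) ?? 0; // data access
    const total = subtotal > 100 ? subtotal * 0.9 : subtotal; // business rule: 10% off above 100
    this.totalLabel = `Total: ${total.toFixed(2)}`; // UI update
  }
}

// AFTER: the same behavior split across tiers.
interface OrderRepository {
  getSubtotal(orderId: number): number;
}

class MapOrderRepository implements OrderRepository {
  constructor(private readonly db: Map<number, number>) {}
  getSubtotal(orderId: number): number {
    return this.db.get(orderId) ?? 0;
  }
}

class DiscountService {
  constructor(private readonly orders: OrderRepository) {}
  totalFor(orderId: number): number {
    const subtotal = this.orders.getSubtotal(orderId);
    return subtotal > 100 ? subtotal * 0.9 : subtotal;
  }
}

class CheckoutPage {
  totalLabel = "";
  constructor(private readonly discounts: DiscountService) {}

  btnCheckout_Click(orderId: number): void {
    this.totalLabel = `Total: ${this.discounts.totalFor(orderId).toFixed(2)}`;
  }
}
```

The rule now lives in `DiscountService`, where it can be unit tested and later called from an MVC controller or an API endpoint without any dependency on the page.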

When creating a modernization strategy for a legacy .NET application, software architects at Resolute Software often assess the refactoring complexity of the legacy system to determine the best modernization approach. Estimating the refactoring complexity at a high level is a useful way to gauge the development effort required to fully modernize a legacy .NET application, such as a Web Forms or Silverlight application.

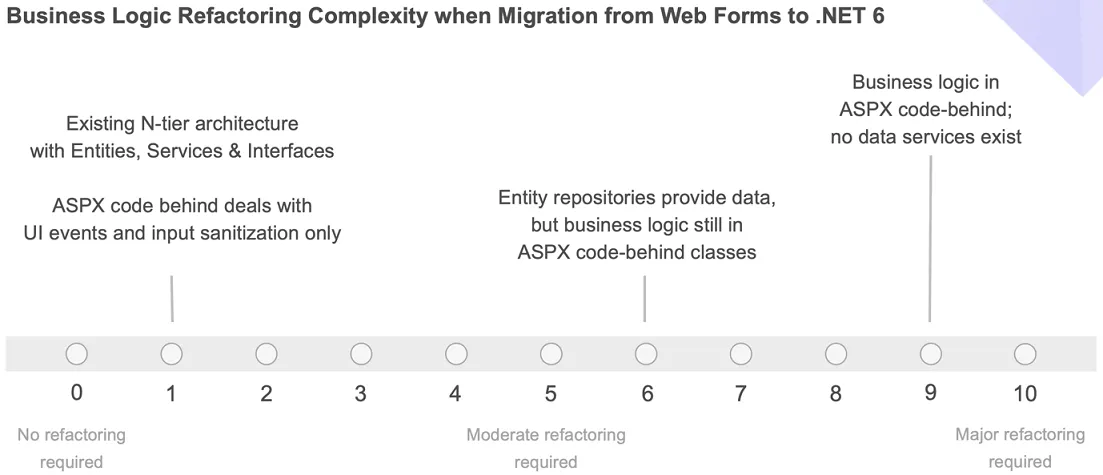

The diagram above presents a few different legacy Web Forms architectures on the refactoring complexity scale. The worst-case scenario, as described earlier, is when the legacy system shows no segregation into tiers and everything is written in the Web Forms UI classes. In a middle-ground scenario, some data access segregation exists (e.g., through Entity Framework or another ORM library) but there is no business logic segregation, which allows more code reuse at the data layer but still requires major refactoring to create a well-defined business logic layer.

Our experience shows that best-case scenarios only appear within existing N-tier architectures. Segregated entity classes, business service classes, service interfaces and other domain-specific components can often be migrated to modern .NET versions with little or no change, maximizing development speed during modernization and introducing the least amount of change (including breaking changes) into the system. If your legacy .NET application already follows the N-tier paradigm, you’ve done yourself a great favor: your modern .NET application will reuse significant parts of your legacy system’s original code and component organization.

Refactor, reuse, migrate

Once you have the N-tier layering in your system sorted out, migrating your business logic from .NET Framework to modern .NET is a well-documented exercise. The typical guideline we follow is:

- Entities and interfaces to .NET Standard

- .NET Standard projects with .NET Standard dependencies

- Services to .NET 6 (or the latest official .NET version at the time of reading)

- External dependencies (logging, JSON serialization) to compatible versions

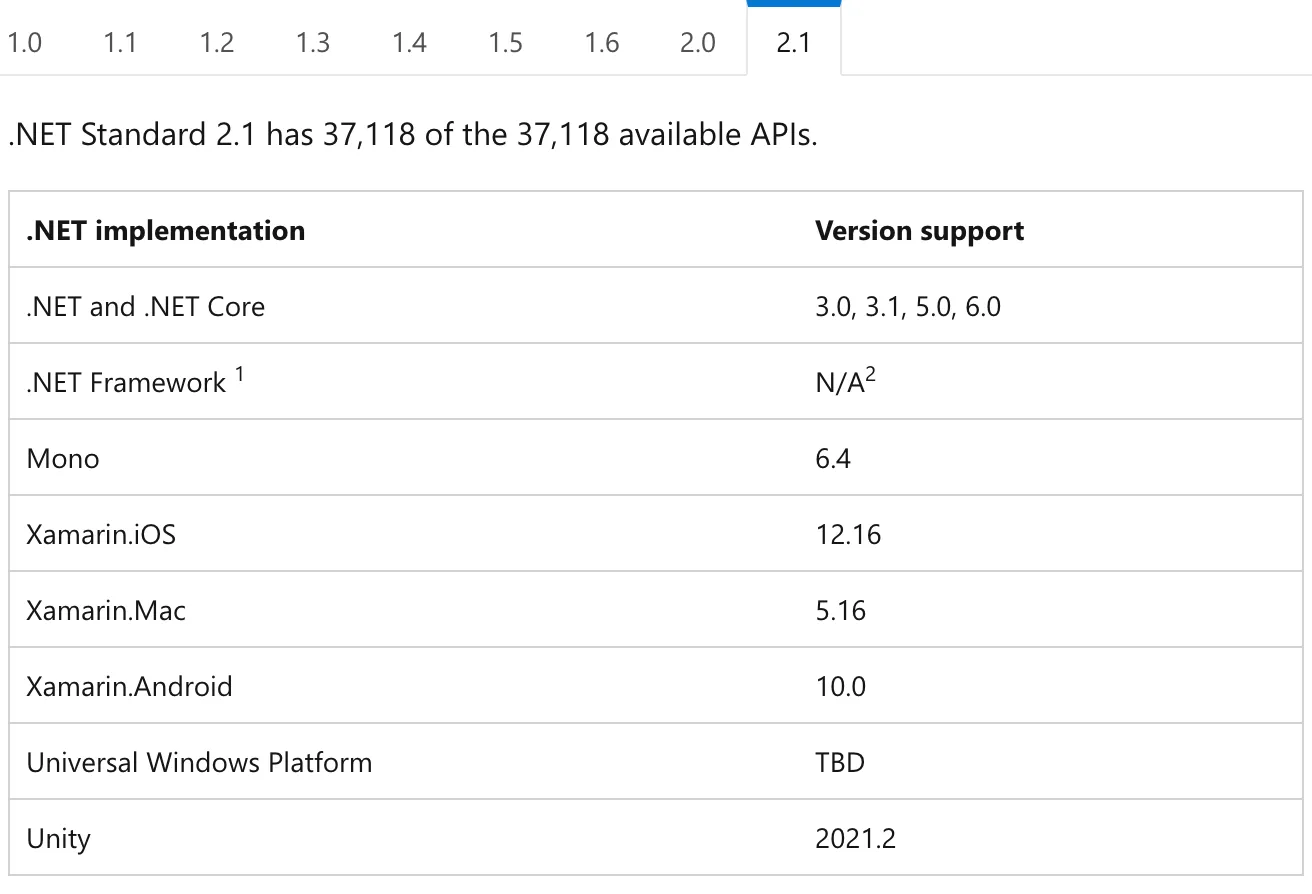

First, entities and interfaces are migrated to .NET Standard, making them interoperable with both legacy .NET Framework and any modern version of .NET in your system (only .NET Standard 2.0 and earlier can be consumed from .NET Framework; .NET Standard 2.1 cannot). Then, services and their dependencies are migrated to the latest .NET, while remaining compatible with all the .NET Standard libraries migrated in the previous step. When mapping between legacy and modern .NET versions, the official .NET Standard compatibility table in Microsoft’s .NET documentation can help you choose the correct version to target.
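Choosing the .NET Standard version for a shared library comes down to finding the newest version that every consuming project supports. The TypeScript sketch below encodes only two rows of the official compatibility table (.NET Standard 2.0: .NET Framework 4.6.1+ and .NET Core 2.0+; .NET Standard 2.1: .NET Core 3.0+ and no .NET Framework); the `Consumer` shape and project names are assumptions for illustration. Microsoft also recommends .NET Framework 4.7.2 or later when consuming .NET Standard 2.0 libraries, even though 4.6.1 is the nominal minimum.

```typescript
// [major, minor, patch]
type Version = [number, number, number];

interface Consumer {
  project: string;
  // .NET 5 and later continue the .NET Core line, so they are modeled as "netcore".
  family: "netframework" | "netcore";
  version: Version;
}

// Minimum runtime versions implementing each .NET Standard version, taken from the
// official compatibility table. Only 2.1 and 2.0 are modeled, newest first.
const standards: { target: string; netframework?: Version; netcore: Version }[] = [
  { target: "netstandard2.1", netcore: [3, 0, 0] }, // no .NET Framework version implements 2.1
  { target: "netstandard2.0", netframework: [4, 6, 1], netcore: [2, 0, 0] },
];

// Encodes a version as one comparable number (assumes each part is below 1000).
const rank = (v: Version): number => v[0] * 1000000 + v[1] * 1000 + v[2];

// Returns the newest modeled .NET Standard version every consumer can reference.
function sharedTarget(consumers: Consumer[]): string | undefined {
  for (const s of standards) {
    const supported = consumers.every((c) => {
      const minimum = c.family === "netframework" ? s.netframework : s.netcore;
      return minimum !== undefined && rank(c.version) >= rank(minimum);
    });
    if (supported) return s.target;
  }
  return undefined; // none fits: consider a netstandard1.x target or multi-targeting
}

console.log(
  sharedTarget([
    { project: "Legacy.WebForms", family: "netframework", version: [4, 8, 0] },
    { project: "Modern.Api", family: "netcore", version: [6, 0, 0] },
  ]),
); // netstandard2.0
```

The returned moniker is what would go into the shared project’s `<TargetFramework>` element.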

Start migrating from the bottom of your dependency tree, usually a project named Entities, Model, BLL, or another designator for data containers in the business layer. Work your way up through the dependency graph, migrating projects one after another to their equivalent modern .NET version while maintaining compatibility with everything migrated previously. If you hit a compatibility issue with a previously migrated dependency, go back to that dependency and reconsider its target .NET version. Migrating projects from .NET Framework to .NET Standard is usually straightforward, but it can become considerably harder depending on the framework APIs and the NuGet packages your application uses.
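Ordering projects bottom-up is a topological sort of the project reference graph. Here is a minimal TypeScript sketch, assuming the references have already been collected into a map (for instance, from the `ProjectReference` items in each project file); the `Shop.*` project names are hypothetical.

```typescript
// Hypothetical solution graph: each project lists the projects it references.
type ProjectGraph = Record<string, string[]>;

// Depth-first topological sort: every project is emitted after all of its references,
// which is the bottom-up migration order. Throws if the references form a cycle.
function migrationOrder(graph: ProjectGraph): string[] {
  const order: string[] = [];
  const state: Record<string, "visiting" | "done"> = {};

  const visit = (project: string): void => {
    if (state[project] === "done") return;
    if (state[project] === "visiting") throw new Error(`Circular reference involving ${project}`);
    state[project] = "visiting";
    for (const reference of graph[project] ?? []) visit(reference);
    state[project] = "done";
    order.push(project);
  };

  // Sorting the keys makes the output deterministic.
  Object.keys(graph).sort().forEach((project) => visit(project));
  return order;
}

const solution: ProjectGraph = {
  "Shop.Web": ["Shop.Services", "Shop.Entities"],
  "Shop.Services": ["Shop.DataAccess", "Shop.Interfaces", "Shop.Entities"],
  "Shop.DataAccess": ["Shop.Entities"],
  "Shop.Interfaces": ["Shop.Entities"],
  "Shop.Entities": [],
};

console.log(migrationOrder(solution).join(" -> "));
// Shop.Entities -> Shop.DataAccess -> Shop.Interfaces -> Shop.Services -> Shop.Web
```

In this example, `Shop.DataAccess` and `Shop.Interfaces` depend only on `Shop.Entities`, so once it is migrated they could be migrated in parallel.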

Migrating NuGet dependencies is another step you should consider. Most NuGet packages are either .NET Standard assemblies or have .NET Standard alternatives, and keeping them or migrating them into your modern system is usually straightforward. If, for whatever reason, you are unable to find or use a .NET Standard version of a NuGet package, you can still build your .NET Standard project against a .NET Framework dependency. Expect compiler warnings down that path, and keep in mind that not everything may work properly at runtime. We don’t typically recommend this approach, but it can save the day when you need a working build quickly or until you find a longer-term replacement.
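Before deciding which dependencies to keep, replace, or build against with warnings, it can help to inventory the target frameworks each package ships (visible on its nuget.org page and in the `lib/<tfm>` folders of the package). The TypeScript sketch below classifies packages by those target framework monikers; the inventory and package IDs are hypothetical, and the three categories are an assumption that mirrors the guideline above rather than NuGet’s own resolution rules.

```typescript
// Hypothetical inventory entry: a package and the target frameworks it ships assets for.
interface PackageInfo {
  id: string;
  targetFrameworks: string[]; // NuGet target framework monikers, e.g. "netstandard2.0", "net48"
}

type Verdict =
  | "netstandard" // ships .NET Standard assets: usually keep or upgrade
  | "modern-net-only" // .NET Core / .NET 5+ assets only: usable from service projects, not .NET Standard ones
  | "framework-only"; // .NET Framework assets only: expect warnings, verify at runtime, find a replacement

function classify(pkg: PackageInfo): Verdict {
  const tfms = pkg.targetFrameworks.map((t) => t.toLowerCase());
  if (tfms.some((t) => t.startsWith("netstandard"))) return "netstandard";
  // "netcoreappX.Y", or dotted "netX.Y" (.NET 5+); .NET Framework monikers have no dot (net472, net48).
  if (tfms.some((t) => t.startsWith("netcoreapp") || /^net\d+\.\d+/.test(t))) return "modern-net-only";
  return "framework-only";
}

// Example inventory with hypothetical package IDs.
const inventory: PackageInfo[] = [
  { id: "Contoso.Logging", targetFrameworks: ["net461", "netstandard2.0"] },
  { id: "Contoso.Pdf", targetFrameworks: ["net48"] },
  { id: "Contoso.Telemetry", targetFrameworks: ["net6.0"] },
];

for (const pkg of inventory) {
  console.log(`${pkg.id}: ${classify(pkg)}`);
}
```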

Test your domain model

The main objective of any modernization project is to produce a new, modern system that preserves and extends the capabilities of the legacy one. Both requirements must be satisfied simultaneously for the new system to replace the old one without major disruption for the user. You cannot extend a system without first preserving its original capability. Thus, existing system capabilities are “table stakes” – minimum business requirements that must be met before the new system evolves and creates additional business value.

To ensure that the major refactoring, replacement, and rewriting in a modernization effort preserve the capabilities of the legacy system, developers write tests that verify the correctness of the domain model. Writing unit tests (tests of individual units or components) is critical when doing business logic modernization. Unit testing frameworks like MSTest, NUnit and xUnit, and mocking libraries like Moq and JustMock, facilitate the creation of unit tests and help isolate units under test from their dependencies.

The main areas of the domain model that should be tested include:

- Business services that implement domain-specific business algorithms or business rules

- Entities and business objects that include business logic or modify data under specific conditions (not just simple data containers)

- Any data flow within the business layer that modifies the input data before sending it to the data access layer for storage
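Here is a minimal sketch of a unit test for the last of those areas, written in TypeScript instead of C# and without a test framework, so a hand-written spy plays the role that Moq or JustMock would play in .NET. The `RegistrationService` and its normalization rules are hypothetical. The test isolates the service from storage and asserts on exactly what reaches the data access layer.

```typescript
// Entity handed to the data access layer.
interface Customer {
  email: string;
  country: string;
}

// Data access abstraction the service depends on.
interface CustomerStore {
  save(customer: Customer): void;
}

// Business service under test: validates and normalizes input before storage.
class RegistrationService {
  constructor(private readonly store: CustomerStore) {}

  register(email: string, country: string): void {
    if (!email.includes("@")) throw new Error("Invalid email");
    this.store.save({ email: email.trim().toLowerCase(), country: country.trim().toUpperCase() });
  }
}

// Hand-written spy standing in for a mocking library: records what reaches the data layer.
class SpyStore implements CustomerStore {
  readonly saved: Customer[] = [];
  save(customer: Customer): void {
    this.saved.push(customer);
  }
}

// Unit tests: each isolates the service from real storage and throws on failure.
function registerNormalizesInputBeforeSaving(): void {
  const store = new SpyStore();
  new RegistrationService(store).register("  Jane@Example.COM ", " de");
  const expected = JSON.stringify([{ email: "jane@example.com", country: "DE" }]);
  if (JSON.stringify(store.saved) !== expected) {
    throw new Error(`Unexpected data sent to storage: ${JSON.stringify(store.saved)}`);
  }
}

function registerRejectsInvalidEmailWithoutSaving(): void {
  const store = new SpyStore();
  let rejected = false;
  try {
    new RegistrationService(store).register("not-an-email", "DE");
  } catch {
    rejected = true;
  }
  if (!rejected || store.saved.length !== 0) throw new Error("Invalid email must be rejected before saving");
}

registerNormalizesInputBeforeSaving();
registerRejectsInvalidEmailWithoutSaving();
```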

The domain model must be tested in both the legacy and the modern version of the system through the same set of tests. Unit tests that validate the correctness of the domain logic in the legacy version then ensure the same logic is preserved, unchanged, in the migrated code. Without a single set of tests, developers risk introducing breaking changes into the domain model and failing to meet the table-stakes requirements of the overall modernization effort.

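Since unit tests must run on both the legacy and the modern version of the domain components, the shared test code should be segregated in .NET Standard libraries. Because test runners need a concrete runtime, that shared code is then executed through test projects targeting each runtime (or one test project that multi-targets, e.g., net48 and net6.0), which allows the same tests to run within both the legacy .NET Framework and the modern .NET runtimes.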
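The "one suite, two implementations" idea can be sketched as follows, in TypeScript standing in for C#. The shipping-fee rule and both calculator classes are hypothetical; in a .NET solution the shared cases would be the tests themselves, executed once against the .NET Framework build and once against the modern .NET build.

```typescript
// Contract both implementations satisfy (hypothetical shipping-fee rule).
interface ShippingCalculator {
  fee(weightKg: number, express: boolean): number;
}

// Legacy implementation, as written in the .NET Framework version.
class LegacyShippingCalculator implements ShippingCalculator {
  fee(weightKg: number, express: boolean): number {
    let total = 5;
    if (weightKg > 10) total = total + (weightKg - 10) * 0.5;
    if (express) total = total * 2;
    return total;
  }
}

// Migrated implementation: restructured, but required to behave identically.
class ModernShippingCalculator implements ShippingCalculator {
  fee(weightKg: number, express: boolean): number {
    const base = 5 + Math.max(0, weightKg - 10) * 0.5;
    return express ? base * 2 : base;
  }
}

// A single set of test cases: [weightKg, express, expected fee].
const sharedCases: Array<[number, boolean, number]> = [
  [1, false, 5],
  [10, false, 5],
  [14, false, 7],
  [14, true, 14],
];

// Runs the same cases against any implementation and returns the failures.
function runSharedSuite(label: string, calculator: ShippingCalculator): string[] {
  const failures: string[] = [];
  for (const [weightKg, express, expected] of sharedCases) {
    const actual = calculator.fee(weightKg, express);
    if (actual !== expected) {
      failures.push(`${label}: fee(${weightKg}, ${express}) returned ${actual}, expected ${expected}`);
    }
  }
  return failures;
}

console.log(runSharedSuite("legacy", new LegacyShippingCalculator())); // []
console.log(runSharedSuite("modern", new ModernShippingCalculator())); // []
```

Any behavioral drift introduced during migration shows up as a failure in the modern run while the legacy run stays green.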

A healthy testing strategy in a modernization project includes a combination of unit tests for the domain model and business services and a set of automated end-to-end tests that verify the critical use cases in the system. We will cover additional testing considerations in an upcoming blog post in this series.

Design the API

Your system’s public API is a major consideration when designing a modern .NET application. Depending on your legacy application’s development technology, you may or may not already have a well-defined API layer, and you may or may not need one now. If you’re coming from ASP.NET Web Forms, your legacy system likely has no separate API layer (Web Forms follows a server-side page paradigm); at most, it has a set of callback methods in ASPX code-behind files that resemble an API but are not segregated into a distinct layer. If you’re modernizing that into a SPA framework with a .NET back end, you now need to design and create a full-blown JSON-based API.

At the other end of the scale, you might have a legacy Silverlight application accessing the back end through an existing JSON API exposed by your .NET back end. In that case, you’d likely be better off reusing the existing API for your modern .NET application, extending it with new entity and operation endpoints as needed.

Your application’s public API sits between the UI and business logic layers, affecting system design both upwards (UI) and downwards (business logic). Thus, understanding API requirements early and designing an API layer that efficiently meets these requirements is essential for successful modernization projects. There are many aspects of your system that affect API-level technical decisions. The most critical aspects we discuss early in the process when doing API design include:

- Data-centric or procedure-centric business logic – affects the API design pattern (REST vs. RPC-based API design)

- Pull vs. push data fetching – affects the choice of a data transport protocol for the API (HTTP-based pull vs. WebSocket-based real-time push)

- “Chattiness” between the client and the server – refers to how frequently the client accesses the API to fetch additional data. It affects API design decisions related to fetching shallow entity sets (parent documents only, relations as links) vs. expanded entity sets (parent and all child documents, relations as objects)

- Existing API technologies in the legacy system (e.g., WCF services) – affects rewrite vs. adapt decisions for the modern API
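To illustrate the chattiness trade-off, the TypeScript sketch below compares a shallow and an expanded representation of the same orders. The order shapes and the `/api/orders/{id}/lines` link format are hypothetical, and the round-trip count assumes the client needs every order’s lines.

```typescript
interface OrderLine {
  sku: string;
  quantity: number;
}

// Expanded entity: parent document with its children embedded as objects.
interface ExpandedOrder {
  id: number;
  customer: string;
  lines: OrderLine[];
}

// Shallow entity: parent document only; children are reachable through a link.
interface ShallowOrder {
  id: number;
  customer: string;
  linesHref: string;
}

// Hypothetical URL scheme for the child collection.
function toShallow(order: ExpandedOrder): ShallowOrder {
  return { id: order.id, customer: order.customer, linesHref: `/api/orders/${order.id}/lines` };
}

// Round trips needed to show N orders with their lines:
// shallow = 1 list request + 1 request per order link; expanded = a single request.
function roundTrips(style: "shallow" | "expanded", orderCount: number): number {
  return style === "shallow" ? 1 + orderCount : 1;
}

const orders: ExpandedOrder[] = [
  { id: 1, customer: "Contoso", lines: [{ sku: "A-1", quantity: 2 }, { sku: "B-7", quantity: 1 }] },
  { id: 2, customer: "Fabrikam", lines: [{ sku: "C-3", quantity: 5 }] },
];

// Payload size of the first response in each style (characters of JSON).
const expandedSize = JSON.stringify(orders).length;
const shallowSize = JSON.stringify(orders.map(toShallow)).length;

console.log({
  shallow: { requests: roundTrips("shallow", orders.length), chars: shallowSize },
  expanded: { requests: roundTrips("expanded", orders.length), chars: expandedSize },
});
```

For this sample data, the shallow list is the smaller first response but costs one extra request per order; the expanded list arrives in a single round trip with a larger payload.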

The above API considerations are framework and technology agnostic – they introduce the high-level system and API design decisions that can be implemented in any development technology. In the context of modern .NET, our software architects choose between Web API (for REST and RPC over HTTP) and SignalR or gRPC (for real-time RPC-based two-way communication).

In the curious case of existing legacy API technologies like WCF, we face another dilemma – rewrite the API surface from scratch or reuse the existing API and expose it to the modern UI layer. The best approach is determined by the fate of the legacy WCF back end in relation to the overall modernization effort. If the backend is modernized entirely, we recommend rewriting the API layer to bring it up to par with the rest of the modern system architecture. If, however, the WCF backend is preserved and maintained as a separate system component, we can preserve the WCF API service layer and map it to a JSON-over-HTTP format through a thin API adapter developed separately.
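A thin adapter of that kind only translates between contracts. The sketch below, in TypeScript rather than C#, assumes a hypothetical generated WCF client (`LegacyOrderServiceClient`) with PascalCase members and numeric status codes, and maps its result to a camelCase JSON contract for the modern UI. A real proxy call would typically be asynchronous; it is synchronous here to keep the example short.

```typescript
// Shape returned by a hypothetical generated client for the legacy WCF OrderService.
interface LegacyOrderContract {
  OrderID: number;
  CustomerName: string;
  OrderDate: Date;
  StatusCode: number; // 0 = open, 1 = shipped (hypothetical legacy codes)
}

interface LegacyOrderServiceClient {
  GetOrder(orderId: number): LegacyOrderContract; // synchronous to keep the sketch short
}

// JSON contract exposed to the modern UI.
interface OrderDto {
  id: number;
  customerName: string;
  orderDate: string; // ISO 8601, UTC
  status: "open" | "shipped" | "unknown";
}

const statusNames: Record<number, OrderDto["status"]> = { 0: "open", 1: "shipped" };

// Thin adapter: no business logic, only translation between the two contracts.
class OrderApiAdapter {
  constructor(private readonly wcf: LegacyOrderServiceClient) {}

  getOrder(orderId: number): OrderDto {
    const legacy = this.wcf.GetOrder(orderId);
    return {
      id: legacy.OrderID,
      customerName: legacy.CustomerName,
      orderDate: legacy.OrderDate.toISOString(),
      status: statusNames[legacy.StatusCode] ?? "unknown",
    };
  }

  // What an HTTP endpoint would return as its JSON response body.
  getOrderJson(orderId: number): string {
    return JSON.stringify(this.getOrder(orderId));
  }
}

// Usage with an in-memory stub in place of the real WCF proxy.
const adapter = new OrderApiAdapter({
  GetOrder: (id) => ({ OrderID: id, CustomerName: "Contoso", OrderDate: new Date(Date.UTC(2024, 0, 15)), StatusCode: 1 }),
});
console.log(adapter.getOrderJson(42));
// {"id":42,"customerName":"Contoso","orderDate":"2024-01-15T00:00:00.000Z","status":"shipped"}
```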

The API in your modern system reflects design choices across tiers. It affects how your application works with data, how it performs business operations and how the user interface is organized, and it significantly impacts your system’s performance. We will discuss these and additional API design considerations in the upcoming blog posts in this series, connecting them to other system design choices like the UI development paradigm, SPA frameworks, server application design patterns and system testing.

Business logic refactoring, N-tier architecture and API design are just some of the many considerations we at Resolute Software make when we embark on a software modernization journey. We are a team of software architects, consultants and technologists, who like a good challenge and make no compromise when designing efficient software applications. If you have a system in dire need of modernization, get in touch, and let’s discuss how we can help.
